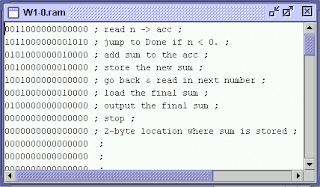

One of the earliest forms of programming was machine language, which was used to communicate directly with the hardware of these machines. However, this language was difficult for humans to use, as it involved writing code in binary, a series of 0s and 1s that represented instructions for the machine.
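To make the idea of binary instructions concrete, here is a minimal sketch in Python of a toy machine. The 8-bit instruction format (a 4-bit opcode followed by a 4-bit operand) and the `LOAD`, `ADD`, and `HALT` opcodes are invented for illustration and do not correspond to any real processor.

```python
# Toy 8-bit machine: the high 4 bits are the opcode, the low 4 bits the operand.
# This instruction set is hypothetical; it is not a real CPU's encoding.
OPCODES = {
    0b0001: "LOAD",  # put the operand into the accumulator
    0b0010: "ADD",   # add the operand to the accumulator
    0b1111: "HALT",  # stop execution
}


def run(program):
    """Execute a list of binary instruction strings and return the accumulator."""
    acc = 0
    for word in program:
        instruction = int(word, 2)       # parse the string of 0s and 1s
        opcode = instruction >> 4        # high 4 bits
        operand = instruction & 0b1111   # low 4 bits
        name = OPCODES[opcode]
        if name == "LOAD":
            acc = operand
        elif name == "ADD":
            acc += operand
        elif name == "HALT":
            break
    return acc


# LOAD 2, ADD 3, HALT
program = ["00010010", "00100011", "11110000"]
result = run(program)
print(result)  # prints 5
```

Even in this tiny example, the program `00010010 00100011 11110000` is hard to read without the opcode table at hand, which is the difficulty early programmers faced.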

One of the first widely used high-level programming languages was Fortran, which was developed in the 1950s at IBM. Fortran was used to program scientific and engineering applications, and was designed to be easy for scientists and engineers to use. As a high-level language, it used commands and syntax that were closer to mathematical notation and human language, making it easier to read and write than machine language.
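The difference between a high-level formula and low-level steps can be sketched as follows. This is an illustrative Python comparison, not Fortran, and the function names and the `r1`/`r2` "registers" are assumptions made up for the example.

```python
def high_level(a, b, c, x):
    # One readable line, close to the mathematical formula a*x^2 + b*x + c.
    return a * x**2 + b * x + c


def low_level(a, b, c, x):
    # The same computation written as single-operation steps,
    # similar in spirit to the instructions a machine would execute.
    r1 = x * x      # r1 = x^2
    r1 = a * r1     # r1 = a * x^2
    r2 = b * x      # r2 = b * x
    r1 = r1 + r2    # r1 = a*x^2 + b*x
    r1 = r1 + c     # r1 = a*x^2 + b*x + c
    return r1


print(high_level(2, 3, 1, 4), low_level(2, 3, 1, 4))  # prints 45 45
```

Both functions compute the same value; the high-level version simply lets the programmer state the formula and leaves the step-by-step breakdown to the language.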

From the late 1950s through the 1970s, a number of other programming languages were developed, including COBOL, BASIC, and C. These languages were used for a variety of applications, including business, education, and scientific research.

In the 1980s and 1990s, the personal computer revolution brought programming to a much wider audience. The IBM PC and the Macintosh made computers more accessible to the general public, and languages such as Pascal and C++ became popular on these new machines, giving people a way to write their own software programs.

The rise of the Internet in the 1990s also had a major impact on programming. Web technologies such as HTML (a markup language rather than a programming language), JavaScript, and PHP were developed to build web pages, and JavaScript and PHP in particular made it possible to create dynamic pages that could interact with users. These technologies made it possible for people to create their own websites and online applications, and they paved the way for the development of e-commerce and other online services.

Today, programming is used in a wide range of applications, from scientific research to video games. There are hundreds of programming languages available, each with its own strengths and weaknesses. Some programming languages are designed for specific tasks, such as data analysis or web development, while others are more general-purpose and can be used for a variety of tasks.

The future of programming looks bright, as advances in technology are making it steadily easier to create software applications. Artificial intelligence and machine learning are being used to automate many programming tasks, and new programming languages are being developed that are even easier to use. The rise of cloud computing is also making it easier for people to access powerful computing resources, and this is expected to lead to the development of new and more advanced software applications.

In conclusion, the origins of programming can be traced back to the late 19th and early 20th centuries, when mechanical calculators and punched card systems paved the way for programming languages. Today, programming is used in a wide range of applications, and advances in artificial intelligence and cloud computing are expected to lead to the development of new and more advanced software applications.
